This course Optimization Methods is oriented for graduate students from applied statistics at the department of mathematics, Jinan University. It emphasizes on theoretical understandings of modern optimization methods for data science, particularly the first-order methods, since implemtentation is easily accessed via LLMs (e.g. DeepSeek, ChatGPT, e.t.c.).

It is unrealistic to read all the materials listed below in one semester, but students must read some of them in order to finish a qualified essay.

The materials are collected and reorganized mainly from:

- H. Liu, J. Hu, Y. Li, Z. Wen, Optimization: Modeling, Algorithm and Theory (in Chinese)

- Beck, A. Introduction to Non-linear Optimization: Theory, Algorithm, and Applications in MALTAB, SIAM, 2014.

- Beck, A. First-Order Methods in Optimization, SIAM, 2017.

- Gartner, B., He, N., and Jaggi, M. Lectures notes on Optimization for Data Science.

- Nesterov, Y. Lectures on Convex Optimization, Springer, 2018

- Nesterov, Y. Lecture notes on Modern Optimization in 2024 summer school at Peking University.

- Lan, G. First-order and Stochastic Optimization Methods for Machine Learning, Springer, 2020.

ALL THE MATERIALS ARE INTENDED FOR NON-PROFIT ACADEMIC USE. IF THEY ARE PRESENTED IMPROPERLY, PLEASE EMAIL ME TO REQUEST REMOVAL.

Syllabus

- Lecture 1 Mathematical Preliminaries [notes]

- Optimization in Data Science

- Inner Products and Norms

- Differentiability

- Optimiality Conditions for Unconstrained Optimization

- Attainable of Minima

- Reading

Domingos, Pedro. “A few useful things to know about machine learning.” Communications of the ACM 55.10 (2012): 78-87. [A classic paper presents the practical philosophy of machine learning.]

Cai, Jian-Feng, Emmanuel J. Candès, and Zuowei Shen. “A singular value thresholding algorithm for matrix completion.” SIAM Journal on optimization 20.4 (2010): 1956-1982. [Appoximated minimization of nuclear norm]

Recht, Benjamin, Maryam Fazel, and Pablo A. Parrilo. “Guaranteed minimum-rank solutions of linear matrix equations via nuclear norm minimization.” SIAM review 52.3 (2010): 471-501. [Translate rank minimization to nuclear norm minimization]

Vidal, René, Ma, Yi, and S.S. Sastry. “Generalized Principal Component Analysis”. Springer New York, NY. 2016. Chapter 2 [Application of L21 norm and nuclear norm minimization]

- Reading

- Lecture 2 Smooth Convex Functions [notes]

- Smooth functions

- Smooth convex functions

- Strongly smooth convex functions

- Lecture 3 Gradient Descent [notes][python demo][matlab demo]

- Vallina Gradient Descent

- Acceralated Gradient Descent

- Reading

- Kingma, Diederik P., and Jimmy Ba. “Adam: A method for stochastic optimization.” ICLR (2015).[Adam, one the most frequently used optimizer for deep learning]

- Loshchilov, Ilya, and Frank Hutter. “Decoupled weight decay regularization.” ICLR (2019).[AdamW]

- Reddi, Sashank J., Satyen Kale, and Sanjiv Kumar. “On the convergence of adam and beyond.” ICLR (2018).[Adam fails to convergent]

- El Hanchi, Ayoub, David Stephens, and Chris Maddison. “Stochastic reweighted gradient descent.” International Conference on Machine Learning. PMLR, 2022.

- Reading

- Lecture 4 Coordinate Descent and Conjugate Gradient Descent [notes] [notes]

- Reading

- Bansal, N., & Gupta, A. (2017). Potential-function proofs for first-order methods. arXiv preprint arXiv:1712.04581.[Construction of potential functions for convergence analysis]

- Karimi, H., Nutini, J., & Schmidt, M. (2016, September). Linear convergence of gradient and proximal-gradient methods under the polyak-łojasiewicz condition. In Joint European conference on machine learning and knowledge discovery in databases (pp. 795-811). Cham: Springer International Publishing.[PL inequality for convergence analysis of gradient descent]

- Shewchuk, J. R. (1994). An introduction to the conjugate gradient method without the agonizing pain.

- Nocedal, J., & Wright, S. J. (Eds.). (2006). Numerical optimization. 2nd Edition, New York, NY: Springer New York. Chapter 5.

- Reading

- Lecture 5 Projected Gradient Descent [notes]

- Optimization over convex sets

- The orthogonal projection

- The gradient projection method

- Lecture 6 Frank-Wolfe Algorithm [notes]

- Reading

- Jaggi, Martin. “Revisiting Frank-Wolfe: Projection-free sparse convex optimization.” International conference on machine learning. PMLR, 2013.

- Ding, Lijun, et al. “Spectral frank-wolfe algorithm: Strict complementarity and linear convergence.” International conference on machine learning. PMLR, 2020.

- Dvurechensky, Pavel, et al. “Self-concordant analysis of Frank-Wolfe algorithms.” International Conference on Machine Learning. PMLR, 2020.

- Zhou, Baojian, and Yifan Sun. “Approximate Frank-Wolfe Algorithms over Graph-structured Support Sets.” International Conference on Machine Learning. PMLR, 2022.

- Reading

- Lecture 7 Subgradient Methods

- Lecture 8 Mirror Descent [notes]

- Bregman divergence

- Mirror Descent

- Reading

- Beck, A. First-Order Methods in Optimization, SIAM, 2017, Chapter 9

- Example 9.19

- Example 9.29

- Beck, A. First-Order Methods in Optimization, SIAM, 2017, Chapter 9

- Reading

- Lecture 10 Conjugate Functions and Smoothing

- Convex conjugate theory [notes]

- Smoothing techniques

- Lecture 11 Proximal operator and Proximal Gradient Descent [notes]

- Proximal operators

- Proximal point algorithm

- Proximal gradient descent

- Non-Euclidean proximal gradent methods [notes]

- Beck, A. First-Order Methods in Optimization, SIAM, 2017, Chapter 10

- Example 10.76

- Beck, A. First-Order Methods in Optimization, SIAM, 2017, Chapter 10

- Lecture 12 Stochastic Optimization [notes]

- Stochastic gradient descent

- Reading

- Nitanda, Atsushi. “Stochastic proximal gradient descent with acceleration techniques.” Advances in neural information processing systems 27 (2014).

- Li, Zhize, and Jian Li. “A simple proximal stochastic gradient method for nonsmooth nonconvex optimization.” Advances in neural information processing systems 31 (2018).

- Gower, Robert M., et al. “Variance-reduced methods for machine learning.” Proceedings of the IEEE 108.11 (2020): 1968-1983.

- Xiao, Lin, and Tong Zhang. “A proximal stochastic gradient method with progressive variance reduction.” SIAM Journal on Optimization 24.4 (2014): 2057-2075.

- Reading

- Stochastic gradient descent

Lecture 13 Primal-Dual Algorithm

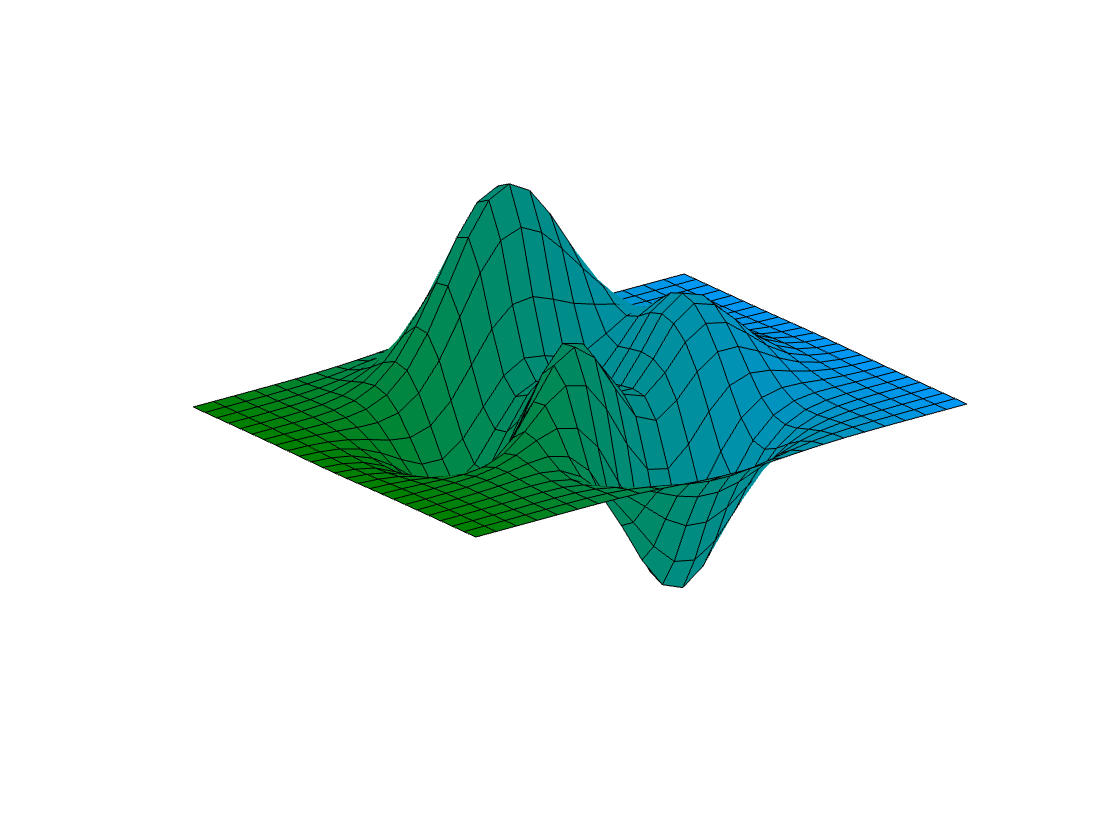

- Lecture 14 Nonconvex Optimization

- Trajectory analysis

- Escaping saddle points

- Lecture 15 Multi-objective Optimization and Its Applications on Multi-task Learning

History

- [2024-09-21] Create this webpage.